The performance testing process aims to identify performance bottlenecks in software applications so you can eliminate them. It is an integral part of performance engineering. Performance tests include load testing and mainly cover the performance of the following aspects of a software application under a particular workload:

Speed - How quickly the application responds.

Scalability - The maximum user load the application can handle.

Stability - Whether the application can remain stable under varying loads.

When testers conduct performance testing, they closely monitor response time, resource usage, and reliability. This guide covers what performance testing is and why it is so important. It explores the various types of performance testing and common performance issues.

Finally, it will detail how to plan and design performance tests and configure the test environment for effective performance testing.

The functionality and features of your software system are essential, but they are not the only important parts of the application. Acceptable performance criteria include reliability, effective resource usage, quick response time, and scalability.

Performance testing aims to debug the software, identify and eliminate performance bottlenecks, and uncover areas that need work before you release the software to the production environment.

Without adequate performance testing, including stress testing, the software could suffer from issues like:

Poor system performance when multiple user requests take place.

Inconsistencies that depend on which operating system is used.

A lack of usability.

Performance testing determines whether the software meets its performance criteria under expected workloads. When software reaches the production environment with poor performance metrics, inadequate performance testing is often the cause. When this happens, it could lead to a failure to meet sales goals.

Mission-critical software for life-saving medical equipment or space launch programs is at serious risk if the performance testing is poor. This type of equipment should be thoroughly performance tested to ensure that it will run without challenges for long periods.

According to research, nearly 60% of Fortune 500 companies experience around 1.6 hours of downtime every week. Considering average labor costs, that alone could cost the company almost $1 million a week.

A substantial loss of over $46 million per annum could have been avoided with quality performance testing.

There are various types of performance testing used for different goals. Here is a basic rundown of these tests.

Stress testing involves running the application in a test environment under extreme workloads to see how it copes with heavy data processing and traffic. The goal of running stress tests is to identify the breaking point of the software.

Stress testing is similar to capacity testing, which focuses on whether the application can handle the traffic it was designed for.

Also known as soak testing, endurance testing aims to ensure the software can handle the expected load by concurrent users over a long period. Soak tests aim to check for system issues like memory leaks, which can impair system performance or cause failures. With adequate soak testing, you can avoid this.

Load tests check the software's ability to function at optimal performance under anticipated user loads. They measure system performance as the workload increases through more concurrent users or more transactions.

Load tests will monitor the system for response time and staying power. Load testing aims to identify performance bottlenecks before the software is launched into production systems.

Despite their similarities, stress, endurance, and load tests differ mainly in the workload they apply. Testers run stress tests under extreme traffic conditions, endurance tests with the expected number of users over a long period, and load tests with the anticipated user load as it increases. Load testing is essential in identifying performance issues.

Spike testing involves performance test scenarios that determine how the software reacts to sudden spikes in the load generated by concurrent users.

With volume testing, test teams populate the database in the test environment with large volumes of data and monitor the overall behavior and system performance. The goal is to check how the system performs under varying database volumes.

The goal of scalability testing is to determine how effectively web and mobile applications scale up to support an increased user load. It is crucial for planning additional capacity for the software system. Without scalability testing, there is no way of knowing what will happen when the number of users increases over time.
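As a rough illustration of how these test types differ in workload, the sketch below returns a simulated number of concurrent users for each minute of a test. The function name `concurrent_users`, the ramp durations, and the multipliers are assumptions chosen for the example, not recommended values.

```python
def concurrent_users(test_type: str, minute: int, expected: int = 100) -> int:
    """Return the simulated number of concurrent users at a given minute.

    `expected` is the anticipated production load. All ramp times and
    multipliers below are illustrative assumptions.
    """
    if test_type == "load":
        # Ramp up to the expected load over 10 minutes, then hold it.
        return min(expected, expected * minute // 10)
    if test_type == "stress":
        # Keep adding users past the expected load to look for the breaking point.
        return expected * minute // 10
    if test_type == "soak":
        # Hold the expected load steadily for the whole (long) run.
        return expected
    if test_type == "spike":
        # Low background load with a sudden burst during minutes 5 and 6.
        return expected * 5 if 5 <= minute < 7 else expected // 10
    raise ValueError(f"unknown test type: {test_type}")


for kind in ("load", "stress", "soak", "spike"):
    print(kind, [concurrent_users(kind, m) for m in (0, 5, 10, 20)])
```

A real test tool would feed a profile like this into its virtual-user scheduler; here the numbers only show the shape of each workload.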

Most performance issues revolve around the software system's behavior in terms of speed, load time, response time, and scalability. Let's explore this further:

The load time is how long the application takes to start. You should keep this to an absolute minimum. Even though it's impossible to guarantee a load time of under a minute in some larger software systems, it's vital to keep it down to a few seconds if possible.

The response time is how long it takes from the time the user inputs data into the application until it outputs a response to that input. It should be relatively fast because users will lose interest if it takes too long, thus downgrading the software application's effectiveness.
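A minimal sketch of measuring response time follows. It times a single call from input to output with a monotonic clock; `fake_request` is a hypothetical stand-in for a real user request, and its 50 ms delay is made up.

```python
import time
from typing import Callable


def measure_response_time(operation: Callable[[], object]) -> float:
    """Return how many seconds `operation` takes from call to return."""
    start = time.perf_counter()
    operation()
    return time.perf_counter() - start


def fake_request() -> str:
    # Hypothetical stand-in for sending input to the application and
    # waiting for its response.
    time.sleep(0.05)
    return "ok"


elapsed = measure_response_time(fake_request)
print(f"response time: {elapsed:.3f} s")
```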

Scalability testing is essential for any software that may expand or increase the number of users. The software has poor scalability if the system performance is compromised when the number of users increases. For this reason, load testing is an integral part of scalability testing.
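One way to make "performance is compromised when the number of users increases" concrete is to compare response times at several load levels against the lightest one. The sketch below assumes a hypothetical `tolerance` of twice the baseline response time, and the measurements are invented for illustration.

```python
from typing import Optional


def first_degraded_load(avg_response_by_users: dict[int, float],
                        tolerance: float = 2.0) -> Optional[int]:
    """Return the smallest user load whose average response time exceeds
    `tolerance` times the response time at the lowest measured load."""
    loads = sorted(avg_response_by_users)
    baseline = avg_response_by_users[loads[0]]
    for users in loads[1:]:
        if avg_response_by_users[users] > tolerance * baseline:
            return users
    return None  # no measured load degraded beyond the tolerance


# Made-up measurements: concurrent users -> average response time in seconds.
measurements = {10: 0.20, 50: 0.22, 100: 0.30, 200: 0.75}
print(first_degraded_load(measurements))  # prints 200
```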

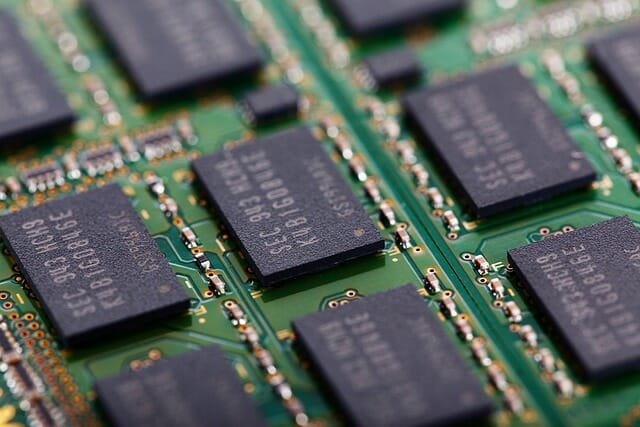

In software testing, bottlenecks are obstructions that degrade system performance. Hardware issues or faulty code can cause them. The key to fixing a bottleneck is to identify whether it is a software or hardware problem, typically by monitoring resource usage while the tests run, so you can fix it at the source. Some common bottlenecks are:

Disk usage

Memory utilization

Network utilization

CPU utilization

Operating system utilization
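As a sketch of how utilization readings can point at a bottleneck, the function below averages percentage samples per resource and reports the ones at or above a threshold. The 85% threshold and the sample values are assumptions for illustration; in practice the readings would come from your monitoring tool.

```python
from statistics import mean


def likely_bottlenecks(samples: dict[str, list[float]],
                       threshold: float = 85.0) -> list[str]:
    """Return resources whose average utilization (percent) is at or above
    `threshold`, busiest first."""
    averages = {name: mean(values) for name, values in samples.items()}
    saturated = [name for name, avg in averages.items() if avg >= threshold]
    return sorted(saturated, key=lambda name: averages[name], reverse=True)


# Made-up utilization samples collected during a test run.
samples = {
    "cpu": [92.0, 97.0, 95.0],
    "memory": [60.0, 62.0, 61.0],
    "disk": [88.0, 90.0, 86.0],
    "network": [30.0, 35.0, 28.0],
}
print(likely_bottlenecks(samples))  # prints ['cpu', 'disk']
```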

If you look at the performance testing problems above, it becomes apparent that speed is a common factor in most of them.

Speed is one of the most crucial attributes of any application's performance metrics. Performance testing helps ensure that the software runs quickly enough to maintain the user's attention.

Regardless of the performance testing methodology you choose, the objective is always the same. Planning the tests helps identify the performance acceptance criteria, and executing them helps determine whether the software system meets those criteria.

Furthermore, performance testing can help with software system comparisons and help identify areas of the system that degrade its performance. The following steps are required when conducting performance testing:

It's crucial to know your physical test environment, as well as your production environment, before you start the testing process.

You should also know the available testing tools, hardware, and software and understand the network configurations used in the performance testing environment.

This knowledge is crucial because it will help the performance tester create suitable test scenarios and execute tests efficiently. It will also help identify possible challenges test engineers may encounter during the performance testing process.

Test scripts are powerful test tools, but only if they cover the proper acceptance criteria, including objectives and constraints for testing criteria like resource allocation, response times, and throughput. Identify project success criteria in addition to these objectives and constraints.

Because the initial project specifications may not include enough performance benchmarks, performance engineers should be able to set additional performance goals and criteria throughout the process.
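Acceptance criteria are easier to apply consistently when they are written down as data. The sketch below is one possible shape, with hypothetical field names and limits covering response time, throughput, and a resource-usage constraint.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AcceptanceCriteria:
    max_response_time_s: float  # slowest acceptable response time
    min_throughput_rps: float   # fewest acceptable requests per second
    max_cpu_percent: float      # resource-usage constraint


def failed_criteria(criteria: AcceptanceCriteria, response_time_s: float,
                    throughput_rps: float, cpu_percent: float) -> list[str]:
    """Return the names of the criteria that the measured values violate."""
    failures = []
    if response_time_s > criteria.max_response_time_s:
        failures.append("response time")
    if throughput_rps < criteria.min_throughput_rps:
        failures.append("throughput")
    if cpu_percent > criteria.max_cpu_percent:
        failures.append("cpu usage")
    return failures


# Illustrative limits and measurements, not recommendations.
criteria = AcceptanceCriteria(max_response_time_s=2.0,
                              min_throughput_rps=100.0,
                              max_cpu_percent=80.0)
print(failed_criteria(criteria, response_time_s=1.4,
                      throughput_rps=90.0, cpu_percent=75.0))
# prints ['throughput']
```

Because the criteria live in one object, goals added later in the project only require a new field and one more check.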

Identify how various users will use the system and identify key testing scenarios for different potential use cases. Simulate multiple end users to plan performance test data and test metrics.

Before executing test scripts, you must prepare the testing environment and arrange performance test tools and other resources.

Software testing is only as good as the overall test design. In this step, you should create performance tests based on the initial design.

Use a testing tool or manually execute and monitor the test scripts. Monitor the performance, and make notes on where you can make improvements.
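To give a feel for what a test tool does when it executes a scenario for many users at once, here is a minimal sketch using a thread pool. `user_scenario` is hypothetical and only sleeps for 10 ms in place of calling the system under test; dedicated tools add pacing, ramp-up, and reporting on top of this idea.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def user_scenario(user_id: int) -> float:
    """Run one simulated user's request and return its response time in seconds."""
    start = time.perf_counter()
    time.sleep(0.01)  # hypothetical stand-in for calling the system under test
    return time.perf_counter() - start


def run_users(user_count: int) -> list[float]:
    """Run `user_count` simulated users concurrently and collect response times."""
    with ThreadPoolExecutor(max_workers=user_count) as pool:
        return list(pool.map(user_scenario, range(user_count)))


timings = run_users(20)
print(f"{len(timings)} users, slowest response {max(timings):.3f} s")
```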

Once you've run the test scripts and monitored the outcome, consolidate and analyze the results. Share the results with stakeholders, then fine-tune the software. Test again after each round of improvements and keep tuning until the software meets the criteria.
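Consolidating results usually means reducing many raw response times to a few summary figures. The sketch below computes the mean, the 95th percentile, and the maximum using Python's `statistics` module; the choice of these three figures and the sample data are assumptions for the example.

```python
from statistics import mean, quantiles


def summarize(response_times_s: list[float]) -> dict[str, float]:
    """Summarize response times (seconds) as mean, 95th percentile, and max."""
    # quantiles(..., n=100) returns 99 cut points; index 94 is the 95th percentile.
    cut_points = quantiles(response_times_s, n=100)
    return {
        "mean": mean(response_times_s),
        "p95": cut_points[94],
        "max": max(response_times_s),
    }


# Made-up response times from one test run.
summary = summarize([0.21, 0.25, 0.22, 0.30, 0.24, 0.23, 0.90, 0.26])
for name, value in summary.items():
    print(f"{name}: {value:.2f} s")
```

Comparing the same summary before and after each tuning round shows whether a change actually helped.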

During performance testing, you should monitor the following basic parameters:

Memory usage - How much physical memory is available to processes on a computer.

Processor usage - How much time the processor spends on non-idle threads.

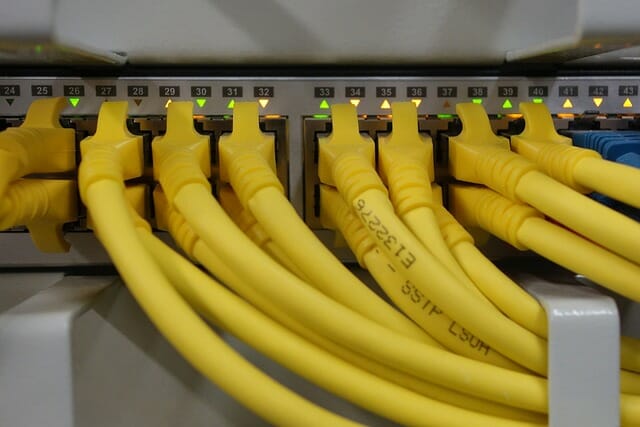

Bandwidth - The bits per second used by a network interface.

Disk time - How much time the disk spends executing read or write requests.

Disk queue length - The average number of read and write requests in the queue during an interval.

Memory pages - How many pages are written to or read from the disk to resolve hard page faults. A hard page fault happens when code that is not in the current working set must be retrieved from disk.

Page faults - The rate at which the processor handles page faults. A page fault happens when a process calls up code from outside its current working set.

Committed memory - How much virtual memory is used?

Private bytes - How many bytes are uniquely allocated to a specific process? This is used to measure memory leaks.

CPU interrupts/second - The average number of hardware interrupts received and processed by the processor every second.

Network bytes/second - The rate at which the interface sends and receives bytes, including framing characters.

Network output queue - The length of the output packet queue, in packets. It should stay under two; a longer queue indicates a bottleneck.

Response time - How much time passes from when a user enters a request until the first character is received?

Throughput/second - The rate at which the computer or network receives requests.

Connection pooling - How many user requests are met by pooled connections? The more, the better the performance is.

Hit ratios - How many SQL statements are handled by cached data instead of costly I/O operations?

Hits/second - How many hits on a web server in each second of the load test?

Maximum active sessions - How many sessions can be active at one time?

Thread counts - How many threads are active and running at one time? In performance testing, thread counts help measure the application's health.

Database locks - Locking tables and databases should be closely monitored and fine-tuned.

Rollback segment - How much data can be rolled back at any given time?

Top waits - The longest wait events, monitored to find which wait times can be reduced when looking at how quickly data can be retrieved from memory.

Garbage collection - To ensure efficiency, monitoring how unused memory is returned to the system is essential.
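Most of the parameters above are read from operating system or database monitoring tools. As a small, self-contained illustration, the sketch below samples a few comparable process-level figures (processor time, traced memory, thread count, garbage collection runs) from inside a Python process using only the standard library. It measures the Python process itself, not a whole machine, and the `snapshot` function and its key names are assumptions for the example.

```python
import gc
import threading
import time
import tracemalloc


def snapshot() -> dict[str, float]:
    """Collect a few process-level metrics for the current Python process."""
    current_bytes, peak_bytes = tracemalloc.get_traced_memory()
    return {
        "cpu_seconds": time.process_time(),        # processor time used so far
        "traced_memory_bytes": current_bytes,      # memory allocated by Python code
        "peak_memory_bytes": peak_bytes,
        "thread_count": threading.active_count(),  # threads currently alive
        "gc_collections": sum(s["collections"] for s in gc.get_stats()),
    }


tracemalloc.start()
before = snapshot()
data = [str(i) for i in range(100_000)]  # allocate memory to make a visible change
after = snapshot()
tracemalloc.stop()

print(after["traced_memory_bytes"] - before["traced_memory_bytes"], "bytes allocated")
```

Taking snapshots at regular intervals during a long run is one simple way to spot steadily growing memory, which is the pattern a leak produces.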

An essential tip for performance testing is to test early and often. A single set of tests cannot possibly tell developers what they need to know. A successful performance testing process consists of a series of repeated tests, which should include:

Test from the start of the project. Never wait until the project winds down and start rushing the performance testing.

Run several performance tests to determine metric averages and ensure consistent findings.

Run performance tests on individual units and modules, not just completed projects.

Applications usually involve several systems, including servers, services, and databases. It's crucial to test every unit together and separately.
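To check whether repeated runs give consistent findings, one simple measure is the coefficient of variation (standard deviation divided by the mean) of the per-run averages. The sketch below assumes a hypothetical 10% limit; the right limit depends on the system and the metric.

```python
from statistics import mean, stdev


def is_consistent(run_averages_s: list[float], max_variation: float = 0.10) -> bool:
    """Return True if the coefficient of variation across runs is within
    `max_variation`. Requires at least two runs."""
    return stdev(run_averages_s) / mean(run_averages_s) <= max_variation


# Made-up average response times (seconds) from repeated test runs.
print(is_consistent([1.00, 1.02, 0.98]))  # prints True
print(is_consistent([1.00, 2.00, 0.50]))  # prints False
```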

In addition to repeating testing, here are other performance testing best practices:

Performance testing should involve developers, test teams, and stakeholders.

Determine how the results will affect the end users who will be using the software eventually, not just the test environment servers.

Use baseline measurements to provide a starting point in performance testing.

Conduct performance tests in an environment as close to the production systems as possible.

Keep the performance test environment separate from the QA environment.

Ensure the test environment remains as consistent as possible.

Don't just calculate averages; track outliers as well. Outliers can reveal potential failures.

Consider your audience when you prepare reports that share performance testing results.

Remember to include any software and system changes in each report.
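Two of the practices above, comparing against a baseline and tracking outliers, can be sketched in a few lines. The outlier rule used here (values above Q3 + 1.5 × IQR) is a common rule of thumb rather than anything this guide prescribes, and the function names and data are assumptions for the example.

```python
from statistics import mean, quantiles


def regression_percent(baseline_s: float, current_s: float) -> float:
    """Return how much slower (positive) or faster (negative) the current
    measurement is than the baseline, as a percentage."""
    return (current_s - baseline_s) / baseline_s * 100.0


def outliers(response_times_s: list[float]) -> list[float]:
    """Return values above Q3 + 1.5 * IQR."""
    q1, _, q3 = quantiles(response_times_s, n=4)
    upper_fence = q3 + 1.5 * (q3 - q1)
    return [t for t in response_times_s if t > upper_fence]


# Made-up response times (seconds) with one slow request.
times = [0.21, 0.20, 0.22, 0.19, 0.23, 0.20, 0.21, 1.90]
print(f"mean: {mean(times):.2f} s")
print("outliers:", outliers(times))
print(f"change vs. baseline: {regression_percent(0.20, 0.25):+.0f}%")
```

In this data, the mean of 0.42 s says little about the typical request, while the outlier check singles out the 1.90 s response that deserves investigation.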

Performance testing has developed considerably in recent years, and there are various testing tools to choose from. Your choice of tool will depend on factors such as license cost, hardware requirements, and support.

Some performance testing tools focus on scriptless test creation. Others leverage advanced AI capabilities to identify performance issues. Keep in mind that no performance testing tool will do everything you need. Research tools to find the best fit, or choose a reputable company with a scalable testing offer.

Performance testing and performance monitoring are essential during the software development process. They have to take place during development and should be completed before the software is released and marketed. Effective performance testing ensures customer satisfaction, thus improving loyalty and retention.

Scriptworks is a testing interface that offers superior performance testing capabilities and an elegantly simple visual interface. It empowers manual testers to create scalable and reusable automated test packs to tackle the ever-evolving performance testing environment.